Key Takeaways

Signal compression operates at the crucial crossroads of engineering precision and strategic information management. It offers not only technical solutions to reduce data load, but also a disciplined mindset: transforming overwhelming volumes of information into meaningful, actionable insight. The following key takeaways capture the discipline’s foundational techniques, practical benefits, and far-reaching relevance for professionals seeking clarity and efficiency in how data is handled.

-

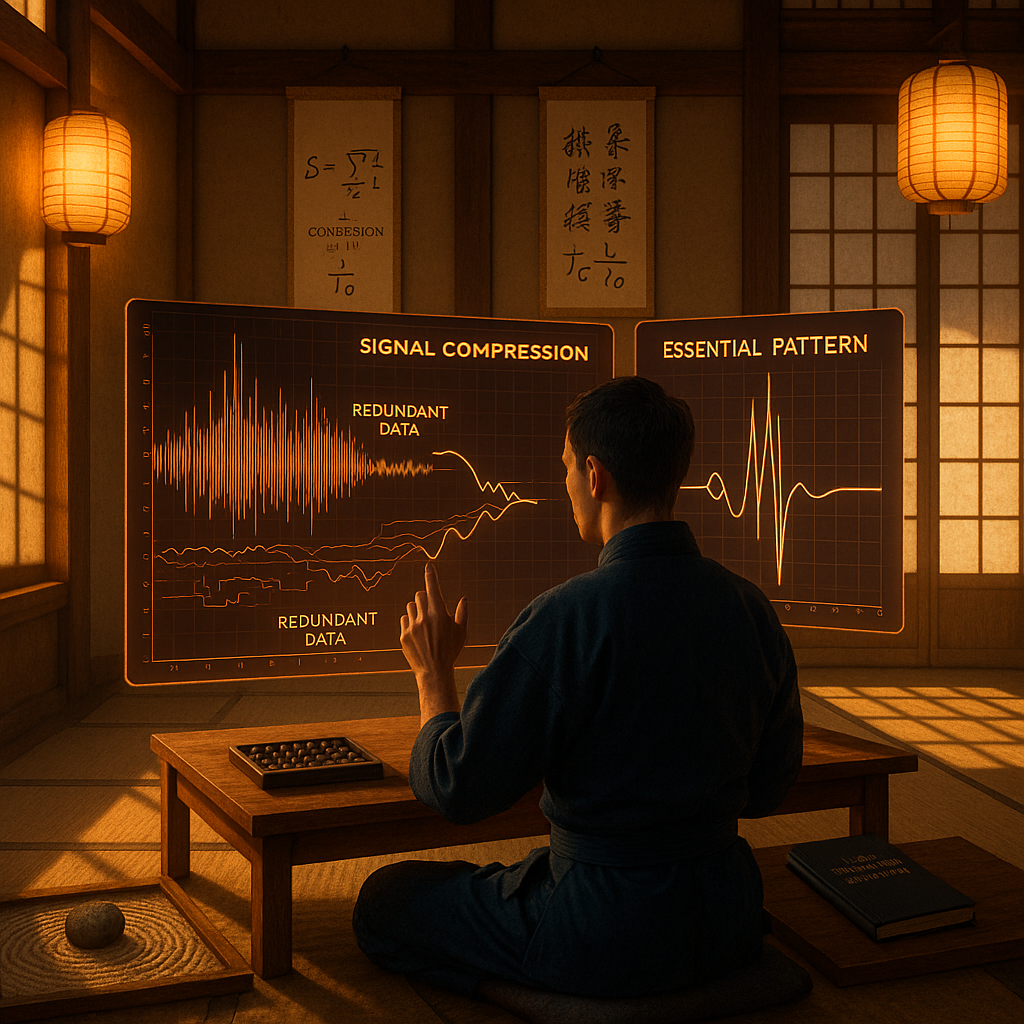

Engineered efficiency: Compression maximizes value and trims redundancy. By identifying and eliminating predictable or irrelevant data, signal compression enables dramatic reductions in storage and bandwidth requirements, all while preserving the essential integrity of information. This principle ensures that resources are focused on what truly matters, whether managing live sensor streams or archiving massive data sets.

- Technique toolkit: From quantization to principal component analysis. Core methods include quantization (mapping signal values onto a smaller set of levels), linear compression (transform-based approaches like Principal Component Analysis), and advanced algorithms that balance data fidelity with resource constraints. These tools form a flexible arsenal to address diverse compression challenges across sectors.

- Beyond bandwidth: Compression powers dynamic, resource-smart systems. Modern technology ecosystems rely on robust compression to transmit, store, and process huge volumes of audio, video, and sensor data. This capability unlocks scalability and responsiveness in industries as varied as telecommunications, cloud computing, medtech, and the internet of things.

- Compression as a strategy: From noise to clarity. By distilling vast, noisy inputs into succinct, relevant signals, compression serves as both metaphor and method for organizational leaders. It enables businesses to overcome information overload, sharpening focus on actionable trends and decisions.

- Advanced techniques: Preserving signal integrity with dynamic range and gain compression. These specialized methods control signal amplitude to protect quality and prevent distortion in real-time communications, so that critical data remains clear and actionable even when input levels vary widely.

- Digital implementation bridges theory and practice. The evolution of digital compression algorithms underpins fast, practical solutions. Integrated across devices and industries, digital implementations allow for tailored adaptation, improving computation, delivery speed, and relevancy of information from cloud storage to edge devices.

Signal compression is more than a technical solution. It is a disciplined approach to extracting clarity from complexity and transforming both data streams and decision-making processes. In the following sections, you will discover how these principles support not just technological progress, but smarter business strategies and sustainable innovation.

Introduction

Every digital interaction (whether you are streaming video content, monitoring patient vitals in real time, or sending secure communications) rests on the silent work of signal compression. This unsung engine of modern information management determines which details survive, what gets streamlined, and how efficiently critical data speeds from origin to destination.

Far from a mere bandwidth-saving tactic, signal compression is foundational to scalable technology and sharper decision-making across fields like finance, healthcare, retail, and beyond. By systematically stripping away redundancy and homing in on meaningful content, these techniques transform raw, overwhelming data into concise and actionable insights. Let’s journey through the mechanics of core compression techniques (such as quantization, dynamic range control, and linear algorithms) to understand how they connect the reality of big data with the promise of high-value outcomes.

Foundations of Signal Compression

Signal compression is a sophisticated discipline that reduces data volume while retaining the essential quality and content of information. At its heart, compression leverages redundancy and predictability within signals, replacing superfluous details with efficient representations. This selective reduction, guided by the principle that not all data points carry equal importance, ensures signal integrity even as data demands escalate.

A key performance metric is the compression ratio: the original data size divided by the compressed size. In practice, engineers may target ratios from about 2:1 (typical when reconstruction must be lossless) to 100:1 (when some information loss is acceptable), calibrating approaches based on application needs and tolerance for quality degradation. This mirrors strategic information management, where leaders must efficiently separate high-value indicators from background noise.
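As a rough illustration of how the compression ratio can be measured, the sketch below uses Python's standard `zlib` module on a made-up, highly repetitive log. The helper name `compression_ratio` and the sample data are assumptions for this example, not part of any particular system.

```python
import zlib


def compression_ratio(original: bytes, compressed: bytes) -> float:
    """Return original size divided by compressed size (4.0 means 4:1)."""
    if not compressed:
        raise ValueError("compressed data must not be empty")
    return len(original) / len(compressed)


# Hypothetical sample: a highly repetitive telemetry log.
sample = b"temp=21.5;status=OK\n" * 500
packed = zlib.compress(sample)

ratio = compression_ratio(sample, packed)
print(f"{len(sample)} bytes -> {len(packed)} bytes ({ratio:.1f}:1)")

# Lossless: decompression restores the input exactly.
assert zlib.decompress(packed) == sample
```

Repetitive input like this compresses far better than typical real-world data, so the ratio printed here should be read as an upper-end illustration rather than a benchmark.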

Three primary forms of redundancy enable effective compression across technical and enterprise contexts:

- Statistical redundancy: Patterns and sequences that repeat predictably, enabling efficient data summarization. For example, repeated patterns in financial time series or recurring signals in industrial machinery can be compressed with minimal loss.

- Perceptual redundancy: Elements that human senses cannot distinguish, such as certain audio frequencies or visual details. Medical imaging leverages perceptual models to store diagnostic-quality images while minimizing unused detail.

- Structural redundancy: Patterns arising from data organization or formatting. In legal records or structured datasets, knowing the inherent structure allows more effective compression and rapid retrieval.

Understanding these forms of redundancy empowers professionals in multiple sectors to optimize data management, surface actionable insights, and maintain both technical and operational performance.
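Statistical redundancy can be estimated with Shannon entropy, which gives the average number of bits per symbol needed when only symbol frequencies are taken into account. The following is a minimal sketch under that assumption; the function name and sample inputs are illustrative, and the estimate ignores ordering patterns that a real compressor could also exploit.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Estimate entropy in bits per byte from observed byte frequencies."""
    if not data:
        return 0.0
    counts = Counter(data)
    total = len(data)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


skewed = b"AAAAAAAABBBBCCDD"   # a few symbols dominate
uniform = bytes(range(16))     # every symbol appears exactly once

print(shannon_entropy(skewed))   # 1.75 bits per symbol
print(shannon_entropy(uniform))  # 4.0 bits per symbol
```

Both inputs are 16 bytes long, yet the skewed one needs fewer than half the bits per symbol. That gap is the statistical redundancy a lossless coder can remove.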

Key Techniques and Algorithms

Lossless Compression

Lossless compression guarantees perfect reconstruction of the original data, making it indispensable wherever data integrity is non-negotiable. Common algorithms include:

- Run-length encoding (RLE): Encodes sequences of repeated data, commonly used in image files and telemetry logs.

- Huffman coding: Employs variable-length encoding for symbols based on frequency, ideal for language and protocol data.

- LZW compression: Uses a dictionary-based approach to identify and replace repeated patterns, widely adopted in file compression and storage.
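Of the algorithms listed above, run-length encoding is the simplest to sketch. The example below is a minimal illustration, assuming the input is a string and that runs are stored as `(character, count)` pairs; production formats pack runs into bytes and differ in detail.

```python
from itertools import groupby


def rle_encode(data: str) -> list[tuple[str, int]]:
    """Collapse each run of identical characters into a (char, count) pair."""
    return [(char, len(list(run))) for char, run in groupby(data)]


def rle_decode(pairs: list[tuple[str, int]]) -> str:
    """Expand (char, count) pairs back into the original string."""
    return "".join(char * count for char, count in pairs)


row = "WWWWWWBBBWWWW"  # hypothetical row of black and white pixels
encoded = rle_encode(row)
print(encoded)  # [('W', 6), ('B', 3), ('W', 4)]

# Lossless round trip.
assert rle_decode(encoded) == row
```

RLE only pays off when runs are long. On data with few repeats, the pair representation can end up larger than the input.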

Typical compression ratios range from 2:1 to 8:1, balancing storage optimization and the need for faithful reconstruction. This makes lossless compression a critical choice in sectors like finance (for transaction logs), legal services (for contracts and discovery files), and healthcare (for diagnostic data).
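Huffman coding, named in the list above, can be sketched with a priority queue that repeatedly merges the two least frequent subtrees. This is a simplified illustration: it builds the code table as a dictionary of bit strings rather than an explicit tree, and the sample message is made up.

```python
import heapq
from collections import Counter


def huffman_codes(text: str) -> dict[str, str]:
    """Build a prefix-free code where frequent symbols get shorter bit strings."""
    freq = Counter(text)
    if not freq:
        return {}
    if len(freq) == 1:  # degenerate case: a single distinct symbol
        return {next(iter(freq)): "0"}
    # Heap entries: (weight, unique tie-breaker, {symbol: code so far}).
    heap = [(w, i, {sym: ""}) for i, (sym, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        # Prefix one bit to every code in each merged subtree.
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w1 + w2, next_id, merged))
        next_id += 1
    return heap[0][2]


message = "aaaaaaaabbbbccd"
codes = huffman_codes(message)
encoded = "".join(codes[ch] for ch in message)
print(codes)
print(len(encoded), "bits versus", 8 * len(message), "bits at one byte per symbol")
```

For this message the encoded length is 25 bits, compared with 120 bits at one byte per character. A real codec would also need to store or transmit the code table, which this sketch leaves out.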

Lossy Compression

Lossy algorithms purposefully discard non-critical data, achieving higher compression ratios at the cost of some detail. Transform-based approaches, like Discrete Cosine Transform (DCT) for image and video compression or wavelet transforms for multi-scale data analysis, dominate this domain.
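To make the transform-based idea concrete, the sketch below applies a one-dimensional DCT to a smooth signal, keeps only the largest coefficients, and reconstructs an approximation. It is a plain-Python illustration written for clarity, not speed; the function names, the test signal, and the choice to keep 4 of 32 coefficients are assumptions for this example. Real image and video codecs work on two-dimensional blocks and add quantization and entropy coding.

```python
import math


def _scale(k: int, n: int) -> float:
    """Orthonormal scaling factor for coefficient k of an n-point DCT."""
    return math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)


def dct(signal: list[float]) -> list[float]:
    """Orthonormal DCT-II of a 1-D signal."""
    n = len(signal)
    return [
        _scale(k, n)
        * sum(x * math.cos(math.pi * (i + 0.5) * k / n) for i, x in enumerate(signal))
        for k in range(n)
    ]


def idct(coeffs: list[float]) -> list[float]:
    """Inverse of dct() (orthonormal DCT-III)."""
    n = len(coeffs)
    return [
        sum(_scale(k, n) * c * math.cos(math.pi * (i + 0.5) * k / n)
            for k, c in enumerate(coeffs))
        for i in range(n)
    ]


def keep_largest(coeffs: list[float], keep: int) -> list[float]:
    """Zero all but the `keep` largest-magnitude coefficients (the lossy step)."""
    ranked = sorted(range(len(coeffs)), key=lambda k: abs(coeffs[k]), reverse=True)
    kept = set(ranked[:keep])
    return [c if k in kept else 0.0 for k, c in enumerate(coeffs)]


# Hypothetical smooth signal: one slow cosine sampled at 32 points.
signal = [math.cos(2 * math.pi * i / 32) for i in range(32)]
approx = idct(keep_largest(dct(signal), keep=4))
max_error = max(abs(a - b) for a, b in zip(signal, approx))
print(f"kept 4 of 32 coefficients, max error = {max_error:.4f}")
```

Smooth signals concentrate their energy in a few coefficients, which is why discarding the rest costs little accuracy. Noisy or sharply changing signals would need more coefficients for the same error.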

Industries benefit from compression ratios up to 50:1 or even 100:1, so long as the result remains fit for purpose. In education, video lectures may be streamed at lower bitrates for remote learners; in marketing, high-resolution graphics are optimized for fast web performance.
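Quantization, mentioned earlier among the core techniques, is perhaps the simplest lossy step: each value is snapped to the nearest of a limited set of levels. The sketch below shows uniform quantization with an assumed step size of 0.25 and made-up sample values.

```python
def quantize(samples: list[float], step: float) -> list[int]:
    """Map each sample to the index of its nearest quantization level."""
    return [round(x / step) for x in samples]


def dequantize(indices: list[int], step: float) -> list[float]:
    """Reconstruct approximate sample values from level indices."""
    return [i * step for i in indices]


samples = [0.12, 0.49, -0.73, 0.98, -0.05]  # hypothetical readings in [-1, 1]
step = 0.25  # assumed step size: a larger step is smaller to store but lossier

indices = quantize(samples, step)
restored = dequantize(indices, step)
print(indices)   # [0, 2, -3, 4, 0]
print(restored)  # [0.0, 0.5, -0.75, 1.0, 0.0]
```

The small integer indices need fewer bits than the original floating-point values, and the reconstruction error never exceeds half the step size. Choosing the step is the fidelity-versus-size decision in miniature.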

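Dynamic range compression is a related but distinct technique: rather than reducing data volume, it narrows the variation in signal amplitude by attenuating loud passages relative to quiet ones, enhancing clarity in audio recordings or visual displays. For example, in telemedicine, dynamic range compression helps keep doctor-patient conversations intelligible regardless of how loudly each party speaks or how far they sit from the microphone.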
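A minimal sketch of dynamic range compression follows, assuming normalized samples in the range -1 to 1, a fixed threshold, and a fixed ratio. It works sample by sample; practical audio compressors also smooth the gain over time (attack and release) and usually apply makeup gain, both of which are omitted here.

```python
def compress_dynamic_range(
    samples: list[float], threshold: float = 0.5, ratio: float = 4.0
) -> list[float]:
    """Attenuate the part of each sample's magnitude that exceeds `threshold`."""
    out = []
    for x in samples:
        level = abs(x)
        if level > threshold:
            # Above the threshold, every `ratio` units of input yield 1 unit of output.
            level = threshold + (level - threshold) / ratio
        out.append(level if x >= 0 else -level)
    return out


speech = [0.05, -0.2, 0.9, -1.0, 0.4]  # hypothetical normalized samples
print(compress_dynamic_range(speech))
```

Quiet samples pass through unchanged while peaks are pulled toward the threshold, so the gap between the loudest and softest parts of the signal shrinks.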

Signal Compression in Action

Implementing signal compression demands diligent consideration of system constraints and performance goals. For real-time applications (like video conferencing, emergency broadcasts, or industrial process monitoring), engineers must weigh:

- Processing overhead versus compression effectiveness

- Latency requirements versus achievable compression ratio

- Resource utilization against the acceptable quality of transmitted data
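The first two trade-offs above can be observed directly by timing a general-purpose compressor at different effort levels. The sketch below uses `zlib` levels 1, 6, and 9 on a made-up payload. The helper `measure` is an assumption for this example, and the timings will vary by machine and data.

```python
import time
import zlib


def measure(payload: bytes, level: int) -> tuple[int, float]:
    """Return (compressed size in bytes, elapsed seconds) for one zlib level."""
    start = time.perf_counter()
    packed = zlib.compress(payload, level)
    return len(packed), time.perf_counter() - start


# Hypothetical payload: repetitive, sensor-style text records.
payload = b"".join(b"sensor=%d;value=%d\n" % (i % 8, i % 100) for i in range(20000))

for level in (1, 6, 9):
    size, seconds = measure(payload, level)
    print(f"level {level}: {size} bytes in {seconds * 1000:.2f} ms")
```

Higher levels generally spend more processing time for a smaller output. Whether the extra time is worth it depends on the latency budget of the application.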

Real-time systems face distinct operational hurdles. For example, a financial trading platform must compress and transmit large volumes of tick data with sub-millisecond delay to preserve competitive advantage. A telemedicine system, on the other hand, must deliver reliable, timely patient diagnostics without sacrificing image quality.

Technical Considerations

- Buffer management and memory optimization: Efficiently handles influxes of data, vital for industrial IoT sensors and healthcare monitoring.

- Hardware acceleration: Customized hardware, such as GPUs and FPGAs, speeds up compression tasks in time-sensitive sectors like autonomous vehicles and robotics.

- Error resilience: Compression algorithms often incorporate recovery mechanisms, essential in aerospace communications and remote environmental monitoring.

- Adaptive compression: Algorithms adjust to changing network or system conditions, crucial for mobile operators, cloud services, and emergency response systems.
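As one possible reading of the adaptive compression item above, the sketch below picks a `zlib` effort level from a measured bandwidth figure. The function names, the bandwidth thresholds, and the sample chunk are all illustrative assumptions; real systems typically adapt on richer signals such as queue depth, CPU load, or packet loss.

```python
import zlib


def choose_level(bandwidth_kbps: float) -> int:
    """Pick a zlib level from measured bandwidth (thresholds are illustrative)."""
    if bandwidth_kbps < 256:
        return 9  # scarce bandwidth: spend CPU to shrink the payload
    if bandwidth_kbps < 2048:
        return 6  # balanced default
    return 1      # plentiful bandwidth: favor speed and low latency


def adaptive_compress(chunk: bytes, bandwidth_kbps: float) -> bytes:
    """Compress one chunk at a level suited to current link conditions."""
    return zlib.compress(chunk, choose_level(bandwidth_kbps))


chunk = b"heartbeat=72;spo2=98\n" * 200  # hypothetical monitoring data
for bandwidth in (128, 1024, 10000):     # hypothetical measurements in kbit/s
    packed = adaptive_compress(chunk, bandwidth)
    print(bandwidth, "kbit/s -> level", choose_level(bandwidth), "->", len(packed), "bytes")
```

Because every level produces a standard zlib stream, the receiver can decompress each chunk without knowing which level the sender chose.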

Real-World Applications

The impact of signal compression extends across a spectrum of industries:

Healthcare Analytics

- Enhanced storage efficiency for high-resolution diagnostic imaging (e.g., radiology, MRI scans), maintaining detail for clinical accuracy.

- Real-time patient monitoring, where multichannel data streams are compressed for rapid alerts and cloud-based analysis.

- Streamlined electronic health records, allowing for secure, efficient, and compliant information sharing between providers.

Financial Markets

- Compression of high-frequency trading data, ensuring quick access and reduced latency for automated trading models.

- Optimization of real-time and archival market feeds, minimizing network congestion and storage costs.

- Selective precision archiving for regulatory compliance, balancing detail and efficiency.

Industrial IoT

- Sensor network optimization, where compressed data extends battery life and expands device reach in manufacturing or resource extraction.

- Predictive maintenance, analyzing compressed signal histories to forecast equipment failures and reduce downtime.

- Resource-constrained edge computing, compressing image or sound data for localized AI processing in sectors from agriculture to urban transport.
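One common way to shrink slowly changing sensor data before transmission is delta encoding: store the first reading and then only the change from one sample to the next. The sketch below is an illustration of that general technique rather than a method described in this article. The readings, function names, and 32-bit packing are assumptions.

```python
import struct
import zlib


def delta_encode(readings: list[int]) -> list[int]:
    """Keep the first reading, then store only the change from the previous one."""
    if not readings:
        return []
    return [readings[0]] + [b - a for a, b in zip(readings, readings[1:])]


def delta_decode(deltas: list[int]) -> list[int]:
    """Rebuild the original readings with a running sum."""
    readings, total = [], 0
    for d in deltas:
        total += d
        readings.append(total)
    return readings


def pack(values: list[int]) -> bytes:
    """Serialize as little-endian signed 32-bit integers, then deflate."""
    return zlib.compress(struct.pack(f"<{len(values)}i", *values))


# Hypothetical slowly drifting sensor, in hundredths of a degree.
readings = [2150 + (i // 7) + (i % 3) for i in range(1000)]
deltas = delta_encode(readings)

assert delta_decode(deltas) == readings  # lossless round trip
print(len(pack(readings)), "bytes raw vs", len(pack(deltas)), "bytes delta-encoded")
```

The deltas are small and repetitive, so a general-purpose compressor handles them much better than the raw values. Fewer bytes on the radio link is one way compression can translate into longer battery life.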

Education and E-Learning

- Scalable streaming of lectures and learning resources, making high-quality content accessible to diverse learners even in low-bandwidth environments.

- Compression of student performance data, facilitating real-time feedback and adaptive instruction.

Legal and Compliance

- Managing massive digital discovery file volumes through structured compression, accelerating document review.

- Ensuring verifiable, intact archiving of contracts and correspondence.

Marketing and Retail

- Fast-loading, data-light digital advertising, driving engagement on mobile devices.

- Real-time compression for point-of-sale systems and inventory management, improving operational speed and reducing infrastructure costs.

Environmental and Scientific Research

- Efficient transmission of climate sensor data from remote field stations.

- Compression of high-resolution satellite or drone imagery for rapid ecological analysis.

Signal Compression as a Framework

Beyond its technical utility, signal compression offers a pragmatic lens for organizational and strategic leadership. Much like an engineer designing an optimal compression algorithm, business leaders must:

- Filter signal from noise.

  - Identify the most relevant performance indicators.

  - Eliminate distractions and reduce complexity for sharper decisions.

  - Prioritize resources where they drive maximum impact.

- Optimize information flow.

  - Streamline communication channels for clarity and speed.

  - Adapt reporting structures to reduce cognitive load and accelerate action.

  - Foster a culture that values clear, focused data over overwhelming volume.

- Enable scalable growth.

  - Manage information surges as organizations expand or encounter new data sources.

  - Preserve decision quality even under rapid change or pressure.

  - Build adaptive systems ready to evolve with technological or market trends.

The trajectory of signal compression is being rapidly shaped by advances in quantum computing and AI-driven adaptive algorithms. These innovations promise leaps in efficiency and contextual awareness, mirroring the growing necessity for robust, agile information management in complex industries. For those curious how these principles translate to the realm of price data, chart construction, and market pattern minimization, see the core article on technical analysis.

Conclusion

Signal compression stands as both a technical imperative and a strategic mindset, empowering engineers and decision-makers to optimize the flow of critical information while preserving the core of what truly matters. By mastering the balance between fidelity and efficiency (choosing when to use lossless techniques versus lossy strategies), organizations can thrive in environments shaped by data intensity and constant change.

From healthcare imaging and financial transactions to smart infrastructure and digital education, real-world deployments illustrate that skillful compression not only saves resources but enables flexibility, innovation, and growth. As quantum breakthroughs and AI-powered tools set new standards, those prepared to apply the timeless principles of signal compression will cut through noise, focus on the essentials, and foster resilience in the face of complexity. The future belongs to those who not only manage data, but refine it into clear, decisive knowledge. The real challenge is not just filtering out the noise, but learning how to channel clarity into action within your own domain. Are you ready to compress complexity and unlock your edge?
